
Cloud-Native AI Platforms - Redefining Enterprise Innovation

As enterprises move beyond traditional IT, cloud-native AI platforms are rapidly emerging as the backbone of digital transformation. By combining AI/ML capabilities with cloud-native architectures (containers, Kubernetes, microservices), businesses are gaining agility, scalability, and intelligence across their operations.

Future Outlook: GenAI-as-a-Service is poised to become a major driver of enterprise innovation. It lets organizations consume AI-driven services (such as copilots, generative analytics, and autonomous decision-making engines) directly from the cloud, without heavy infrastructure investment.

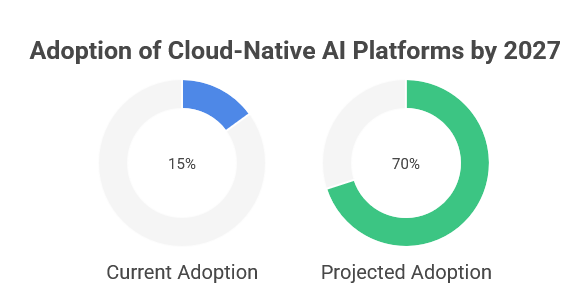

Industry Insight

- Gartner predicts that 70% of enterprises will adopt cloud-native AI platforms by 2027, up from less than 15% today.

- Cloud hyperscalers (AWS, Azure, GCP) are embedding pre-trained GenAI models into their native services, reducing time-to-market for AI solutions by 40–60%.

- Leading sectors adopting cloud-native AI: Banking (fraud detection), Healthcare (drug discovery), Retail (personalized shopping), and Manufacturing (predictive maintenance).

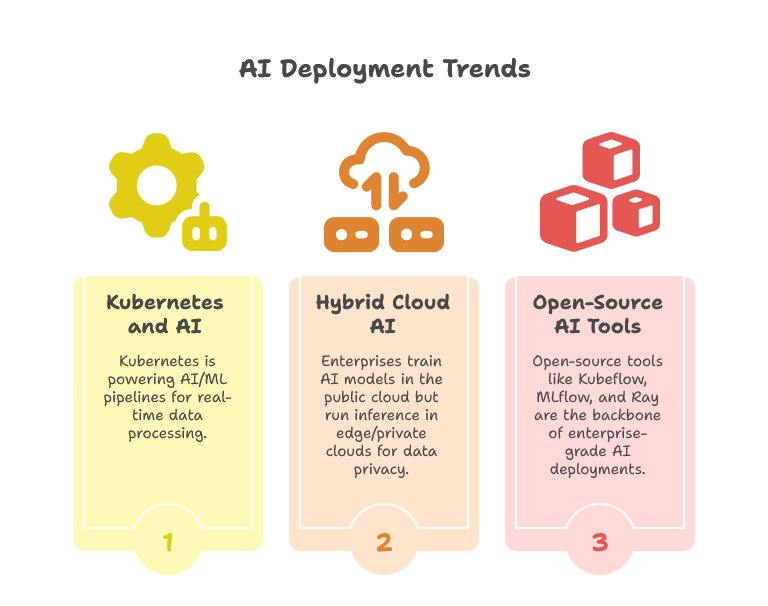

Did You Know?

- Kubernetes + AI = The perfect duo! Kubernetes is not just for app scaling; it’s now powering AI/ML pipelines for real-time data processing.

- Hybrid cloud AI adoption is increasing: enterprises are training AI models in the public cloud but running inference in edge/private clouds for data privacy.

- Open-source tools like Kubeflow, MLflow, and Ray have become core building blocks of enterprise-grade AI deployments.

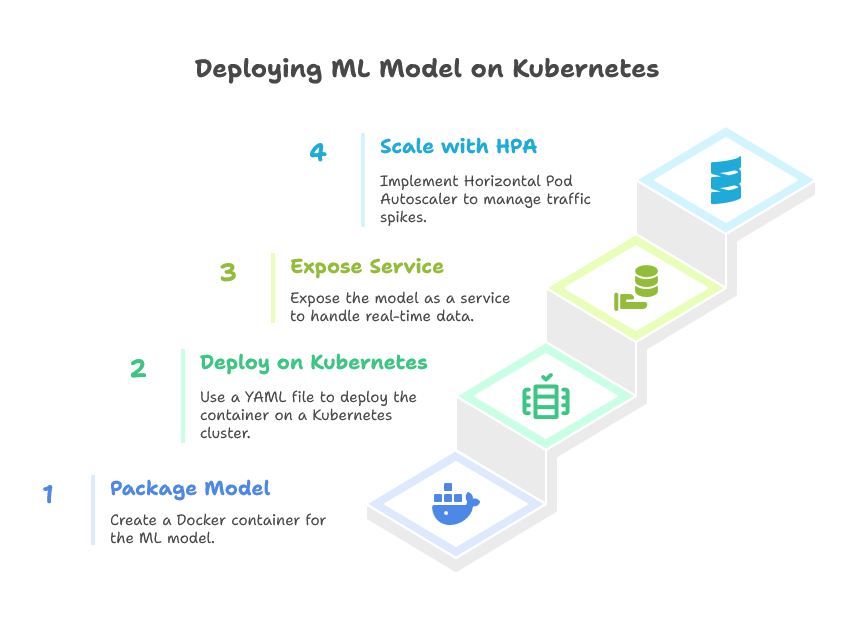

Hands-On Corner: Deploying AI on Cloud-Native Infrastructure

Mini Walkthrough: Running a Sentiment Analysis Model on Kubernetes

- Package your ML model (e.g., a BERT-based sentiment classifier) into a Docker container image.

- Deploy it on a Kubernetes cluster using a Deployment manifest (YAML).

- Expose it through a Kubernetes Service and test it with real-time data streams (e.g., tweets, customer feedback).

- Scale pods automatically with a Horizontal Pod Autoscaler (HPA) to handle sudden traffic spikes.
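The Deployment, Service, and autoscaling steps can be sketched as Kubernetes manifests. Because this post otherwise has no code, the sketch uses Python to build the three objects as plain dictionaries and print them as a single JSON `List`, which `kubectl apply -f` accepts just as it accepts YAML. The app name `sentiment-api`, the image `registry.example.com/sentiment-bert:1.0`, port 8080, the resource requests, and the 70% CPU target are all hypothetical placeholders. The field names follow the `apps/v1`, `v1`, and `autoscaling/v2` APIs. CPU-based autoscaling only works when the container declares CPU requests and the cluster runs a metrics source such as metrics-server.

```python
import json

# Hypothetical placeholders: none of these values come from the walkthrough.
APP = "sentiment-api"
IMAGE = "registry.example.com/sentiment-bert:1.0"
PORT = 8080


def deployment(replicas: int = 2) -> dict:
    """Deployment that runs the containerized sentiment model."""
    labels = {"app": APP}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": APP, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": APP,
                        "image": IMAGE,
                        "ports": [{"containerPort": PORT}],
                        # CPU requests are required for CPU-utilization autoscaling.
                        "resources": {"requests": {"cpu": "500m", "memory": "1Gi"}},
                    }]
                },
            },
        },
    }


def service() -> dict:
    """ClusterIP Service that routes port 80 to the model pods."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": APP},
        "spec": {
            "selector": {"app": APP},
            "ports": [{"port": 80, "targetPort": PORT}],
        },
    }


def autoscaler(min_replicas: int = 2, max_replicas: int = 10, cpu_target: int = 70) -> dict:
    """HorizontalPodAutoscaler (autoscaling/v2) targeting average CPU utilization."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": APP},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": APP},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    "target": {"type": "Utilization", "averageUtilization": cpu_target},
                },
            }],
        },
    }


def manifest_list() -> dict:
    """Bundle all three objects into a v1 List for a single `kubectl apply`."""
    return {"apiVersion": "v1", "kind": "List", "items": [deployment(), service(), autoscaler()]}


if __name__ == "__main__":
    print(json.dumps(manifest_list(), indent=2))
```

Usage, assuming the script is saved as `manifests.py`: run `python manifests.py > sentiment.json`, then `kubectl apply -f sentiment.json`. Generating the objects from one place keeps the labels, selector, and target names consistent across all three objects. Hand-written YAML, Helm, or Kustomize are common alternatives.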

👉 Impact: Real-time, auto-scalable AI services that scale up and down with business demand.
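Under the hood, the HPA runs a control loop that is re-evaluated on a sync period (15 seconds by default). According to the Kubernetes documentation, it computes `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. It skips scaling when that ratio is within a tolerance of 1.0 (0.1 by default) and keeps the result between `minReplicas` and `maxReplicas`. The function `desired_replicas` is a hypothetical, simplified approximation of that rule, offered for intuition only. The real controller also applies stabilization windows (5 minutes by default for scale-down), scaling policies, and special handling for missing metrics and unready pods, so capacity follows demand over seconds to minutes rather than instantly.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Approximate the HPA core rule: ceil(current * current_metric / target_metric).

    Hypothetical simplification: ignores stabilization windows, scaling
    policies, missing metrics, and unready pods.
    """
    ratio = current_metric / target_metric
    # Inside the tolerance band the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the autoscaler's configured bounds.
    return max(min_replicas, min(max_replicas, desired))
```

Take a 70% CPU target as an example. Four pods averaging 140% would be scaled to 8, and six pods averaging 35% would be scaled down to 3. Four pods averaging 74% would be left alone, because 74/70 falls within the tolerance band.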

Mini Case Study: Retail Giant Embracing Cloud-Native AI

A global retail brand struggled with customer churn due to outdated personalization strategies. By moving to a cloud-native AI platform, it:

- Deployed an AI-powered recommendation engine on Kubernetes clusters.

- Integrated real-time purchase and browsing data from cloud-based data lakes.

- Served personalized offers in under 50 ms to millions of users worldwide.

Result:

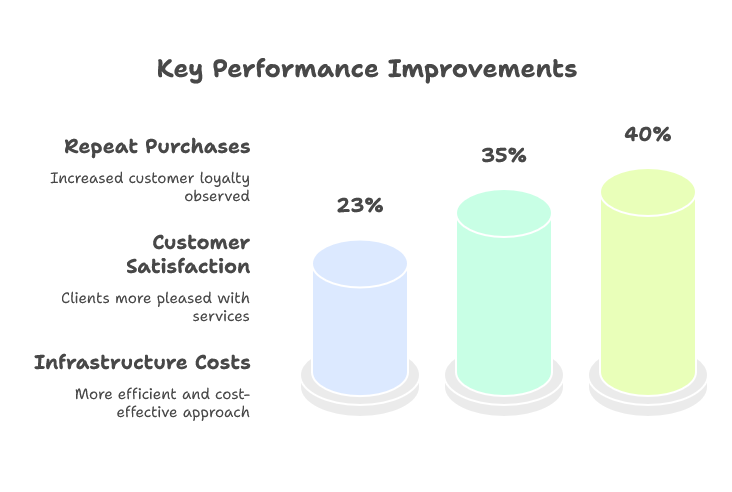

- 23% increase in repeat purchases

- 35% improvement in customer satisfaction

- 40% reduction in infrastructure costs compared to legacy systems

🗣️ Quote of the blog

“Cloud-native AI isn’t just about technology – it’s about building a self-evolving enterprise that learns, adapts, and innovates continuously.”

— Satya Nadella, CEO of Microsoft

Corporate Training & Certifications

To help enterprises adopt and scale Cloud-Native AI, we provide:

- Corporate Training Programs on DevOps, Cloud, AI/ML, and Generative AI for enterprise teams.

- Customized Hands-on Workshops: Deploying AI models on Kubernetes, CI/CD for AI pipelines, Cloud Security, and more.

- DevOps Certifications by DevOps University – empowering professionals to lead future-ready digital initiatives.